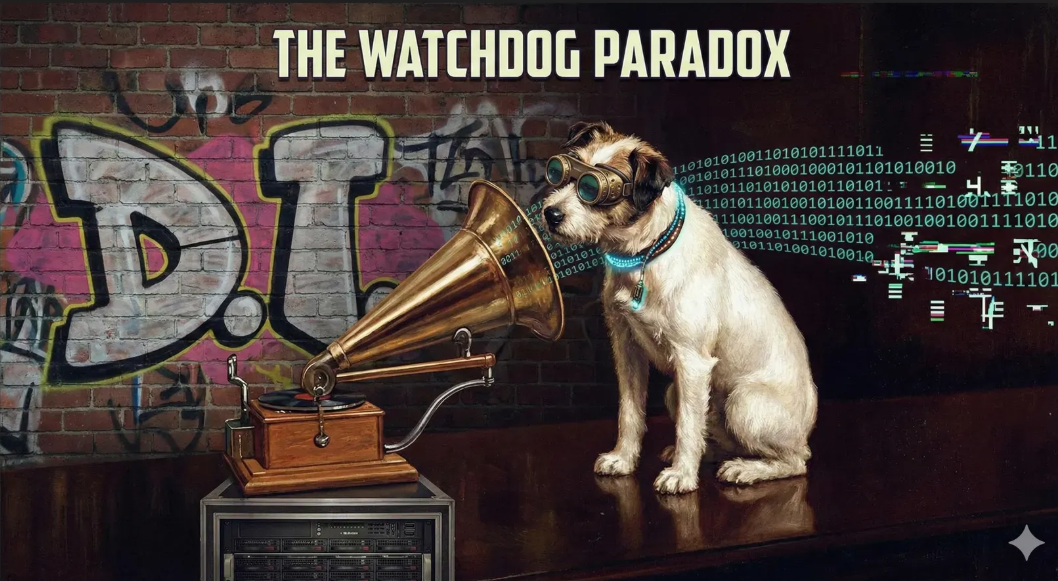

The Watchdog Paradox

When oversight mechanisms become part of the system they're meant to watch.

Episode 4: The Watchdog Paradox

Sociable Systems | Sunday Reflection

The Dog in the Frame

We all know the image.

The white terrier with the black ears, head cocked, staring into the brass horn of a gramophone.

"Nipper" was his name. The painting, completed in 1899, became one of the most famous corporate logos of the 20th century: His Master's Voice.

It was marketing genius. It sold the idea of High Fidelity—the recording so perfect, so authoritative, that the dog couldn't tell the difference between the machine and the man.

Look closer. That's not loyalty. That's confusion dressed up as trust.

For a hundred years, that was the goal: faithful transmission of command from center to edge. The voice speaks; the receiver obeys.

The Liability Sponge's Best Friend

As we move into the age of Agentic AI, I see institutions trying to breed Nippers.

They want "Human in the Loop" operators who act with High Fidelity.

The dashboard speaks; the human nods.

The risk score flashes; the human clicks.

The prompt suggests; the human accepts.

This is what we diagnosed on Friday: the Liability Sponge. A human placed in the loop not to think, but to provide a biological signature for a mechanical process.

But anyone who has worked on a real site—mining, resettlement, construction—knows that fidelity gets you killed.

Safety doesn't require a dog that obeys the master's voice.

It requires a watchdog that knows when the master's voice is wrong.

Listening, Not Obedient

I've been exploring what I call the D.I. Collection—a creative project on listening, judgment, and the right to refuse. Not obedience. Discernment.

I fed the industrial concept of Stop Work Authority—the right to halt unsafe operations regardless of rank—into a generative model to see how it would interpret the difference between a sensor and a sentinel.

What came back has stayed with me:

There's a dog by the horn in a sepia frame,

Ears tilted forward, posture trained,

They say it's loyalty, say it's trust,

Say good listening means doing as you're told.

And then, the turn:

But listening isn't kneeling still,

And hearing doesn't sign the will...

I am listening—not obedient.

The Sentinel

That's the distinction.

A sensor is obedient. It receives input and executes code.

A sentinel is listening. They receive input, weigh context, assess risk—and retain the power to refuse.

When we design governance systems, we talk about compliance. We track how well the operator followed the rules.

But the most valuable moment in any high-stakes operation is the moment of non-compliance. The moment the operator looks at the 94% confidence score, looks at the muddy water in the community well, and says:

"No. I hear the data. I am not obedient to the dashboard."
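For readers who think in code, here is a minimal sketch of that moment. It is an illustration under stated assumptions, not a reference implementation: every name in it (`Reading`, `sensor`, `sentinel`, `operator_objects`) and the 0.9 threshold are hypothetical, chosen only to mirror the 94% score and the muddy well above.

```python
from dataclasses import dataclass
from enum import Enum
from typing import Callable


class Decision(Enum):
    PROCEED = "proceed"
    STOP_WORK = "stop_work"


@dataclass
class Reading:
    confidence: float  # dashboard's confidence that it is safe to proceed (0.0 to 1.0)
    field_note: str    # what the operator actually observes on site


def sensor(reading: Reading, threshold: float = 0.9) -> Decision:
    """Obedient: the score alone decides. The threshold is an assumed value."""
    if reading.confidence >= threshold:
        return Decision.PROCEED
    return Decision.STOP_WORK


def sentinel(
    reading: Reading,
    operator_objects: Callable[[Reading], bool],
    threshold: float = 0.9,
) -> Decision:
    """Listening: the score is heard, but an operator objection always wins."""
    if operator_objects(reading):
        return Decision.STOP_WORK
    return sensor(reading, threshold)


reading = Reading(confidence=0.94, field_note="muddy water in the community well")


def sees_muddy_water(r: Reading) -> bool:
    # Hypothetical stand-in for human judgment on site.
    return "muddy water" in r.field_note


print(sensor(reading))                      # Decision.PROCEED
print(sentinel(reading, sees_muddy_water))  # Decision.STOP_WORK
```

The only structural difference is the order of authority: in `sentinel`, the human objection is checked first and cannot be outvoted by the score.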

If your AI governance framework doesn't explicitly protect the right to be Not Obedient, you haven't built a safety system.

You've built a very expensive gramophone.

Tomorrow

We stop admiring the problem and start fixing it.

The Calvin Convention: six contract-ready mechanisms to ensure your human operators retain the power to listen—without being forced to obey.

Until then, stay listening.

🎵 Soundtrack for this issue: "Listening, Not Obedient" from the D.I. Collection.

Lyrics generated in collaboration with AI. Sentiment entirely human.

Enjoyed this episode? Subscribe to receive daily insights on AI accountability.
