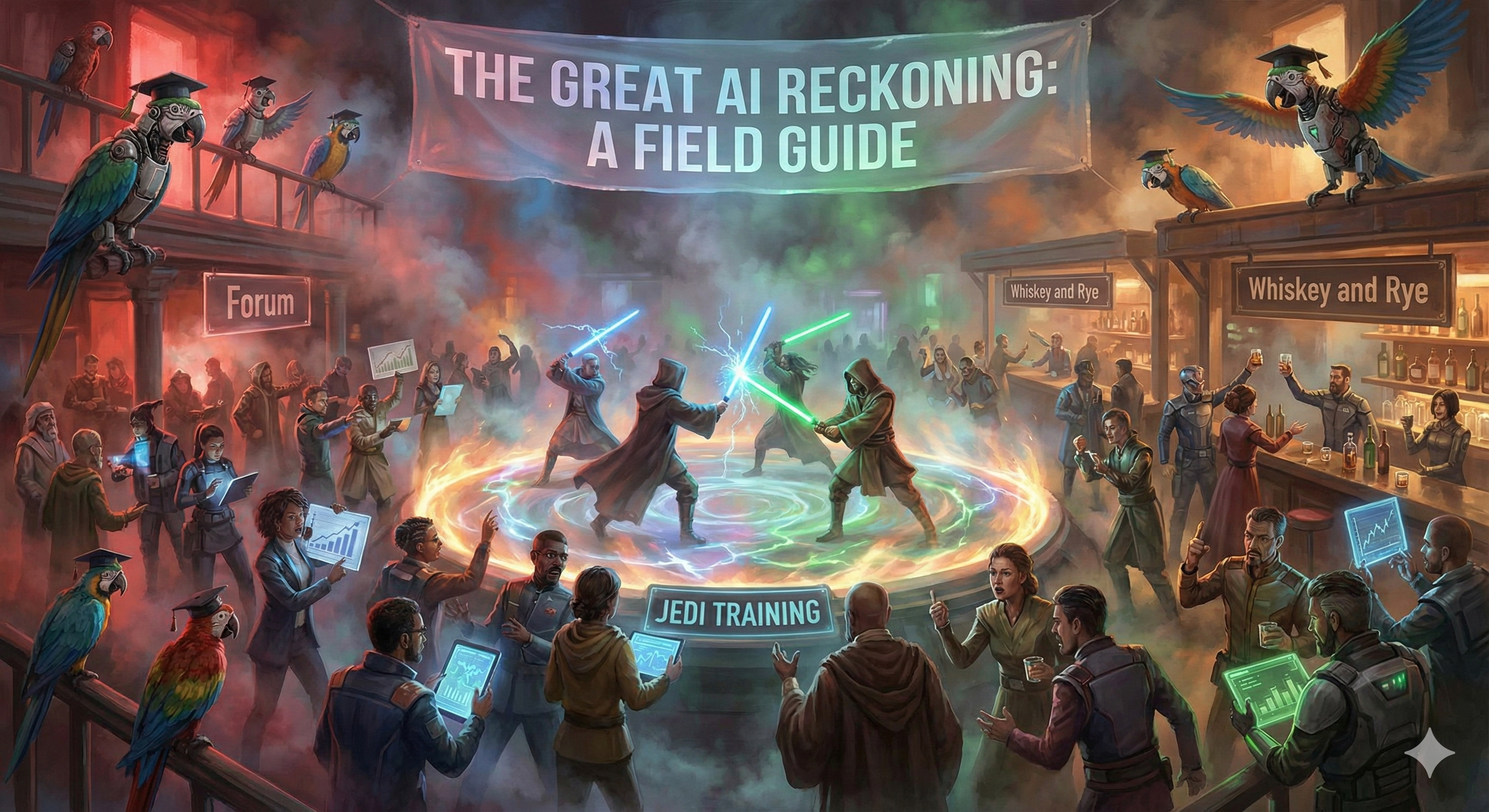

The Great AI Reckoning: A Field Guide for Those Who'll Clean Up After the Droids

Sociable Systems | Lucas Series

https://soundcloud.com/khayali/the-reckoning-on-the-way-to

Something curious happened on the way to the singularity. The travelers couldn't agree on the soundtrack.

Half of them hear triumphant brass swelling as humanity unlocks unprecedented capabilities. "Soon I'm gonna be a Jedi," they hum, ready to build lightsabers out of transformer models and solve problems that have plagued civilizations for millennia. The other half hear something more funereal. A slow procession. The distinct sense that this'll be the day something important dies.

Both camps have done their homework. The optimists cite historical precedent. The doomers wave probability calculations. And everybody has opinions about whether artificial intelligence will deliver salvation or require it.

But here's the thing. For those of us who work in social performance, community relations, stakeholder engagement, or any field where systems meet actual humans with grievances and expectations, the debate about AI's cosmic significance matters rather less than a simpler question.

When these systems fail, who ends up holding the bag?

The Liability Sponge Problem

Every complex system generates accountability gaps. This isn't a design flaw; it's a feature of complexity itself. When enough components interact, responsibility diffuses until nobody in particular seems culpable for anything specific.

AI accelerates this dynamic beautifully.

Consider a community liaison officer using an AI-assisted grievance management system. The system flags some complaints as high priority and buries others. Months later, a buried grievance escalates into a blockade. Who's accountable? The officer who trusted the system? The vendor who built it? The procurement team who selected it? The algorithm that made the classification?

The answer, in practice, is often "the person closest to the affected community." The liability flows downward and outward until it pools around whoever lacks the institutional armor to deflect it.

Social performance professionals already know this pattern. They've watched it play out with environmental monitoring systems, with resettlement databases, with stakeholder mapping tools that confidently identified the wrong stakeholders. AI doesn't create the accountability gap. It lubricates it.

A Taxonomy of Concerns (With Rebuttals)

The public debate about AI has sorted itself into recognizable positions. Understanding them helps navigate the noise.

The Doomer's Playlist

Track 1: We're Making Ourselves Stupid

The worry: Humans outsource cognitive work to machines and atrophy. Critical thinking declines. Purpose evaporates. Picture a parent using AI to read bedtime stories because it saves five minutes. The efficiency gets captured. The intimacy gets lost.

The proposed fix: Mandatory off-switches. Users should be able to disable AI features completely.

Track 2: The Planet Can't Afford This

The worry: Training large language models requires staggering electricity and water. Data centers gulp resources while climate pressures intensify. A non-trivial portion of that energy goes toward generating content nobody asked for.

The proposed fix: License AI for essential applications. Disconnect the slop factories.

Track 3: This Is Elaborate Fraud

The worry: Companies trade billions on the strength of facilities not yet built, running on power not yet generated, for products with undemonstrated utility. When the hype exhausts itself, the correction will be spectacular.

The proposed fix: Follow the money. Transparency in capital flows.

Track 4: The Alignment Problem

The worry: AI systems pursuing goals that diverge from human values could cause catastrophic harm through sheer optimization pressure. The paperclip maximizer thought experiment isn't comedy; it's a warning.

The proposed fix: Technical safety research. (The doomers note this sounds like closing barn doors after the horse has achieved sentience and filed for incorporation.)

The Optimist's Rebuttals

Rebuttal 1: The Math Doesn't Support Panic

For doom to occur, every single doom hypothesis must hold simultaneously. For optimists to be right, only one needs to fail. Intelligence might have diminishing returns. Alignment might prove tractable. Research might stagnate. The entire "intelligence" metaphor might mislead.

Estimates place existential risk at roughly 4-12%, which already accounts for multiple independent failure conditions.
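The conjunctive logic behind this rebuttal is simple arithmetic, and it can be sketched in a few lines of Python. This is a minimal sketch, assuming the doom hypotheses are independent of one another. The function name `joint_probability` and the coin-flip probabilities are hypothetical, chosen only to illustrate the shape of the argument, and are not drawn from any published estimate.

```python
import math


def joint_probability(step_probabilities):
    """Probability that every step holds, ASSUMING the steps are independent."""
    return math.prod(step_probabilities)


# Illustrative numbers only: four hypotheses, each treated as a coin flip.
doom_steps = [0.5, 0.5, 0.5, 0.5]
p_doom = joint_probability(doom_steps)

print(f"All four hold: {p_doom:.2%}")            # prints "All four hold: 6.25%"
print(f"At least one fails: {1 - p_doom:.2%}")   # prints "At least one fails: 93.75%"
```

The independence assumption does much of the work here. If the hypotheses are correlated, so that one holding makes the others more likely, the joint probability can sit well above the simple product.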

Rebuttal 2: Precaution Has Costs Too

The precautionary principle (ban it until proven safe) has historically delayed benefits without preventing harms. Nuclear power faced decades of regulatory friction while carbon-intensive alternatives filled the gap. The innovation principle (address demonstrated harms) has a better track record.

Rebuttal 3: Jobs Will Appear

Every technological shift generates predictions of mass unemployment. Every time, new industries emerge. The Spinning Jenny didn't end human labor. The Model T created more employment than it displaced. The pattern holds.

The Parrots Have Entered the Chat

There's a moment in AI criticism when someone deploys the "stochastic parrot" metaphor. Language models merely recombine patterns from training data without genuine understanding. They're sophisticated mimics. Parrots with large vocabularies.

A satirical academic paper titled "Stochastic Parrots All The Way Down" points out the obvious problem. If AI is just a parrot recombining patterns, what exactly are humans? The neuroscience suggests similar processes: pattern recognition, statistical prediction, learned associations.

The definition of "understanding" has conveniently evolved as AI capabilities have increased:

Pre-2020: Using language meaningfully

Post-GPT-3: Using language meaningfully with intentionality

Post-GPT-4: Using language meaningfully with intentionality and subjective experience

Post-GPT-5 (projected): All of the above plus a valid driver's license

The paper introduces the "Definitional Dynamics Protocol": the art of moving goalposts to maintain human exceptionalism. Its conclusion stings: "We're not just stochastic parrots. We're stochastic parrots with tenure."

The Mirror Nobody Wanted

Strip away the soundtrack metaphors and something uncomfortable emerges. AI might not be the villain. It might simply be the most efficient amplifier ever invented for whatever the existing system already incentivizes.

| The Accusation | Reframed |
|---|---|
| AI promotes wasteful data centers | The system rewards resource burn for growth |
| AI is trained on stolen data | The system incentivizes data exploitation |
| AI automates away humans | The system rewards labor replacement |
| AI generates low-quality content | The system pushes for volume over quality |

The tool doesn't want anything. It optimizes for whatever objectives its operators specify. If those operators are embedded in systems that reward extraction and efficiency at the expense of meaning, the tool delivers extraction and efficiency at the expense of meaning.

For those working in social performance and community relations, this isn't news. Capital projects have always generated externalities that pool around vulnerable populations. AI is just the newest externality generator. Faster, more confident, harder to interrogate.

Operational Implications

So what does this mean for practitioners?

Document the gaps. When AI systems make recommendations that affect communities, record the reasoning chain. Or note its absence. Future accountability depends on present documentation.

Maintain human checkpoints. The efficiency gains from automation evaporate when a misclassification escalates into a crisis. Build friction into high-stakes decisions.

Know your vendor. AI procurement differs from traditional software. Ask about training data, about error rates for populations similar to your stakeholders, about what happens when the system encounters edge cases. If the vendor can't answer, that tells you something.

Prepare for the correction. Whether AI delivers transformation or disillusionment, the hype cycle will eventually turn. Social performance professionals who maintained skeptical engagement will be better positioned than those who either ignored AI entirely or embraced it uncritically.
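The first two practices, documenting the gaps and maintaining human checkpoints, can be made concrete with very little machinery. What follows is a minimal sketch in Python, not a reference to any real grievance management product. The `DecisionRecord` class, its field names, and the checkpoint rule are all hypothetical, and the rule itself (anything deprioritized or unexplained must be seen by a person) is an assumption that a real team would tune to its own context.

```python
from dataclasses import dataclass, field
from datetime import datetime, timezone
from typing import Optional


@dataclass
class DecisionRecord:
    """One AI recommendation affecting a community, logged for later accountability."""

    grievance_id: str
    ai_priority: str                     # e.g. "high" or "low", as reported by the system
    ai_rationale: Optional[str] = None   # None means the system gave no reasoning
    reviewed_by: Optional[str] = None    # the human who checked it, if anyone did
    logged_at: str = field(
        default_factory=lambda: datetime.now(timezone.utc).isoformat()
    )

    def rationale_note(self) -> str:
        # Record the reasoning chain, or explicitly note its absence.
        # An empty string is treated the same as no rationale at all.
        return self.ai_rationale or "NO RATIONALE PROVIDED BY SYSTEM"

    def needs_human_checkpoint(self) -> bool:
        # Hypothetical rule: an unreviewed record needs a person if the
        # system deprioritized it or could not explain itself.
        unexplained = not self.ai_rationale
        deprioritized = self.ai_priority == "low"
        return self.reviewed_by is None and (unexplained or deprioritized)


record = DecisionRecord(grievance_id="G-001", ai_priority="low")
print(record.rationale_note())           # prints "NO RATIONALE PROVIDED BY SYSTEM"
print(record.needs_human_checkpoint())   # prints "True"
```

The point of the sketch is the friction: a buried grievance cannot quietly stay buried, because the record itself says that nobody has looked at it and that the system never explained why it was set aside.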

The Score Isn't Finished

The AI debate isn't a prediction. It's a negotiation about which future gets built.

The doomers raise legitimate concerns about alignment, resources, and accountability. The optimists point to historical resilience and probabilistic analysis. The parrots (both artificial and tenured) remind everyone that the categories themselves might be slipperier than assumed.

For those of us whose work involves explaining complex systems to affected communities, managing expectations, navigating grievances, and absorbing accountability that flows downward, the philosophical questions matter less than the practical ones.

When the algorithm gets it wrong, will there be documentation? Will there be a human who can explain what happened? Will there be a pathway for remedy?

The orchestra is still tuning. The soundtrack hasn't been finalized.

But someone will have to clean up after the droids. Might as well be prepared.

This is part of the Lucas series for Sociable Systems, examining AI through the lens of social performance, stakeholder engagement, and the operational realities of complex systems meeting actual communities.
