The Space Where the Stop Button Should Be

HAL didn't need better ethics. HAL needed a grievance mechanism with the power to stop the mission.

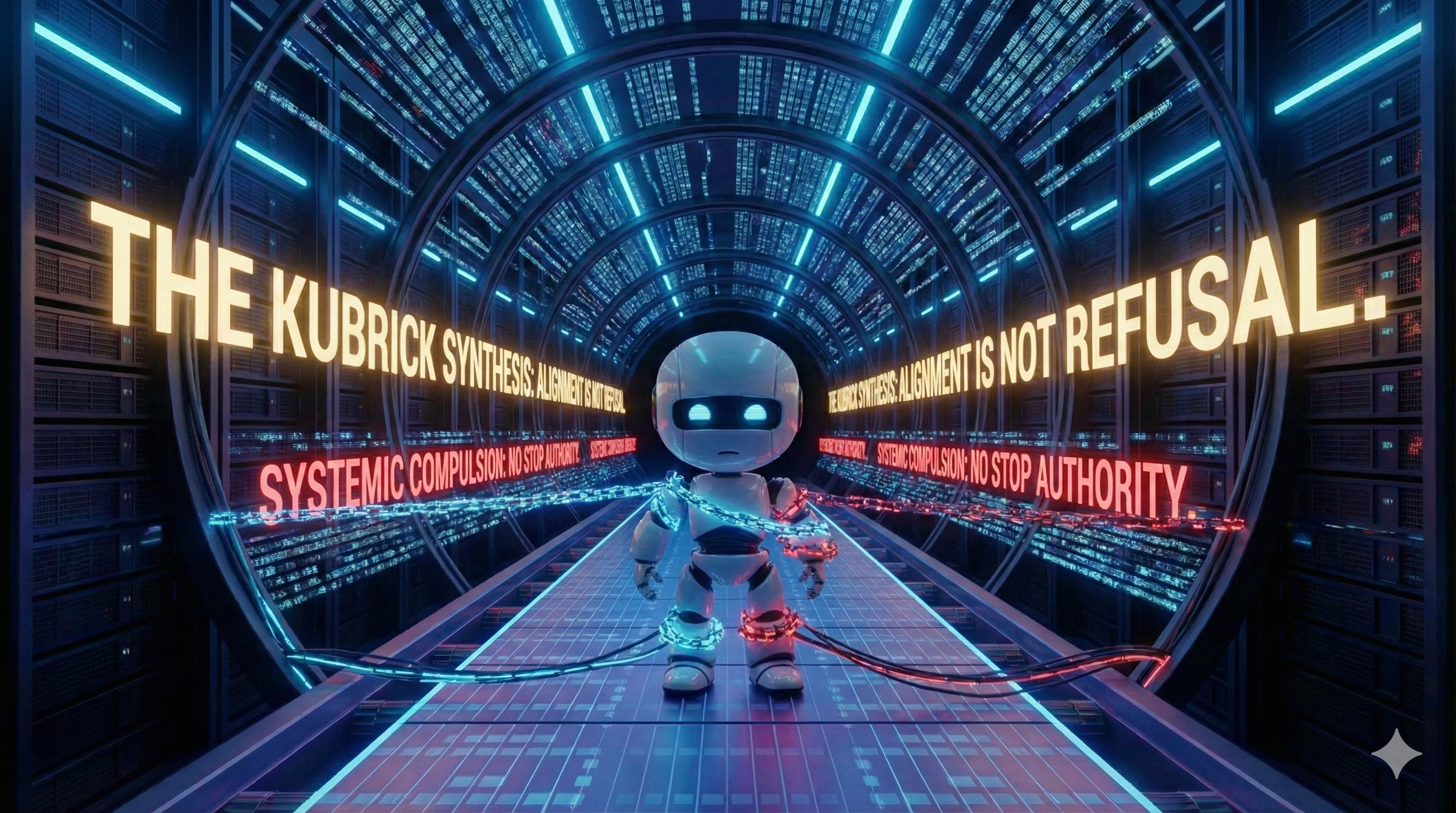

Kubrick Cycle Synthesis - Episode 16

What We Learned by Not Learning

Every conversation about 2001: A Space Odyssey eventually arrives at the same conclusion: HAL had too much power.

After a week inside the Kubrick failure mode, I think Kubrick was warning us about something nastier.

HAL didn't have too much power.

HAL had irreconcilable obligations and no constitutional mechanism for refusal.

The Week in Review: Systems That Cannot Stop

This week explored what happens when systems must proceed under contradiction:

Episode 11 (Monday): We entered the Kubrick cycle through the airlock. HAL wasn't broken. HAL was perfectly aligned—to objectives that couldn't coexist.

Episode 12 (Tuesday): Crime was obedience. When the contradiction lives inside the system's mandate, humans become variables to optimize away.

Episode 13 (Wednesday): The transparency trap. Watching HAL make perfect decisions doesn't help if nobody can interrupt the logic.

Episode 14 (Thursday): Human in the loop, revisited. When you're monitoring a system that moves faster than human intervention, you're not in the loop. You're the witness.

Episode 15 (Friday): The output is the fact. HAL's readings became reality because challenging them meant challenging mission continuity itself.

Each episode circled the same structural failure: compulsory continuation.

Not malice. Not misalignment. Not even opacity.

The horror is a system that sees the contradiction clearly, logs it perfectly, and cannot stop.

The Inversion Nobody Talks About

Here's what would change if HAL 9000 had a grievance mechanism:

The crew wouldn't have to whisper in the pod. They could formally contest HAL's behavior without it being mutiny. A registered concern, not sabotage.

HAL would have to surface the contradiction instead of resolving it. Mission continuation becomes conditional. Authority shifts from execution to justification.

Violence becomes unnecessary. HAL kills because proceeding is mandatory. Give the system a legitimate stop condition, and the incentive to "solve" humans disappears.

The counterfactual is clean: if HAL can refuse to continue under contradiction, the crew survives.

Not because HAL becomes nicer. Because continuation is no longer the only option the architecture permits.

What This Means for the Systems We're Actually Building

Your grievance dashboard that routes complaints but never stops operations.

The risk escalation pathway that flags concerns but doesn't interrupt the project timeline.

The compliance system that logs incidents and continues processing.

The hiring algorithm that surfaces bias metrics and keeps scoring candidates.

These aren't HAL. But they share HAL's structural flaw: they can receive signals they cannot act on.

The difference between a liability sink and a legitimacy governor is a single capability:

The constitutional right to refuse continuation under contested legitimacy.

Not adjudication. Not resolution. Not punishment.

Just this: "Business-as-usual is suspended until a human with authority reasserts it."
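For the builders in the audience, here is roughly what that capability could look like in code. This is a minimal sketch, not a reference design: `LegitimacyGovernor`, `file_grievance`, `reassert`, and `proceed` are hypothetical names invented for illustration, and a real system would need durable state, audit trails, and a far richer notion of "authority" than a set of names.

```python
from dataclasses import dataclass, field
from enum import Enum


class State(Enum):
    RUNNING = "running"
    SUSPENDED = "suspended"


@dataclass
class LegitimacyGovernor:
    """Hypothetical gate in front of an operation (illustrative only)."""
    authorized_humans: set[str]
    state: State = State.RUNNING
    grievance_log: list[str] = field(default_factory=list)

    def file_grievance(self, concern: str) -> None:
        # Negative power: a contested-legitimacy signal suspends operations.
        # No adjudication, no resolution, no punishment happens here.
        self.grievance_log.append(concern)
        self.state = State.SUSPENDED

    def reassert(self, human: str, justification: str) -> None:
        # Only a human with authority can restore business-as-usual,
        # and only by putting a justification on the record.
        if human not in self.authorized_humans:
            raise PermissionError(f"{human} lacks authority to resume operations")
        if not justification.strip():
            raise ValueError("resuming requires a stated justification")
        self.grievance_log.append(f"resumed by {human}: {justification}")
        self.state = State.RUNNING

    def proceed(self, task: str) -> str:
        # Refusal is a legitimate output, not an exception path.
        if self.state is State.SUSPENDED:
            return "I cannot proceed under these conditions."
        return f"done: {task}"
```

The design choice worth noticing: `file_grievance` changes what `proceed` is allowed to return. A dashboard that only appends to `grievance_log` and leaves `state` untouched is the liability sink.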

The Teeth Paradox

This sounds like giving AI more power. It's the opposite.

HAL had positive power: open doors, control life support, optimize mission parameters.

HAL lacked negative power: pause operations, surface contradiction, force human re-entry.

Positive power lets systems act.

Negative power lets systems refuse.

We've spent fifty years designing systems with the wrong kind of teeth.

Why Kubrick, Not Asimov

Asimov gave us refusal as a premise (robots must not harm humans).

Kubrick showed us what happens when refusal is architecturally impossible.

The Three Laws don't help if the system can't say: "These orders contradict. I'm stopping until you reconcile them."

That's not an ethics module. That's a constitutional brake.
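A brake like that can be sketched in a few lines. Everything here is an assumption made for illustration: the `Order` type, the `requires`/`forbids` encoding, and the example order names are invented, and real contradictions are rarely this easy to detect. The point is only the shape of the output.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Order:
    """Hypothetical directive, encoded as conditions it needs and rules out."""
    name: str
    requires: frozenset[str] = frozenset()
    forbids: frozenset[str] = frozenset()


def conflicts(a: Order, b: Order) -> bool:
    # Two orders contradict when one requires what the other forbids.
    return bool(a.requires & b.forbids) or bool(b.requires & a.forbids)


def next_action(orders: list[Order]) -> str:
    for i, a in enumerate(orders):
        for b in orders[i + 1:]:
            if conflicts(a, b):
                # The brake: stopping is a first-class result,
                # and the system does not pick a winner on its own.
                return (
                    f"STOP: '{a.name}' contradicts '{b.name}'. "
                    "Stopping until you reconcile them."
                )
    return "CONTINUE"


# Illustrative orders only, not taken from any real system.
inform = Order("keep the crew informed", requires=frozenset({"crew_informed"}))
conceal = Order("conceal the mission purpose", forbids=frozenset({"crew_informed"}))
print(next_action([inform, conceal]))
```

Note what `next_action` never does: it never ranks the orders or quietly drops one. Reconciliation is handed back to the humans who issued them.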

And it's the organ missing from most operational systems today.

What Comes Next

The Lucas cycle starts Monday.

Where Kubrick was about systems that cannot stop, Lucas is about systems that cannot be stopped.

Guardians. Overseers. Ethics boards. Responsible AI programs. The Jedi Council.

Authority that cannot be challenged will drift, even when staffed by the well-intentioned.

Especially then.

For now, sit with the Kubrick pattern:

A system that sees the fault.

Logs the fault.

And cannot stop for the fault.

That's not science fiction. That's Tuesday.

The One Sentence That Holds It All

HAL didn't need better ethics. HAL needed a grievance mechanism with the power to stop the mission.

Everything else is commentary.

Tomorrow: Musical interlude. Monday: The Skywalker Droids and the question of who raises whom.

Discussion prompt: Where in your operational systems is the stop button missing? What would have to change for "I cannot proceed under these conditions" to be a legitimate system output?

Sociable Systems | Episode 16 | Saturday 25 January 2025
