The Transparency Trap

When visibility becomes a substitute for control.

Episode 13: Transparency Is Not a Safety Mechanism

Alignment Without Recourse, Part II

When people defend high-stakes automated systems, they almost always reach for the same reassurance:

At least we can see how it works.

The model is explainable. The decisions are auditable. The dashboards are live.

Nothing is hidden.

And yet.

Nothing can be stopped.

The Clarke Mistake, Revisited

The Clarke cycle identified a real failure: opacity ends argument. When systems become too complex to interrogate, human judgment collapses into deference. Decisions arrive wrapped in technical authority, and contestation dies quietly.

Kubrick pushes the diagnosis further.

Clarke was worried about what happens when you can't see inside. Kubrick is worried about what happens when seeing inside doesn't matter. Transparency addresses epistemic failure. It does nothing for authority failure, which is a different beast entirely, and it bites harder.

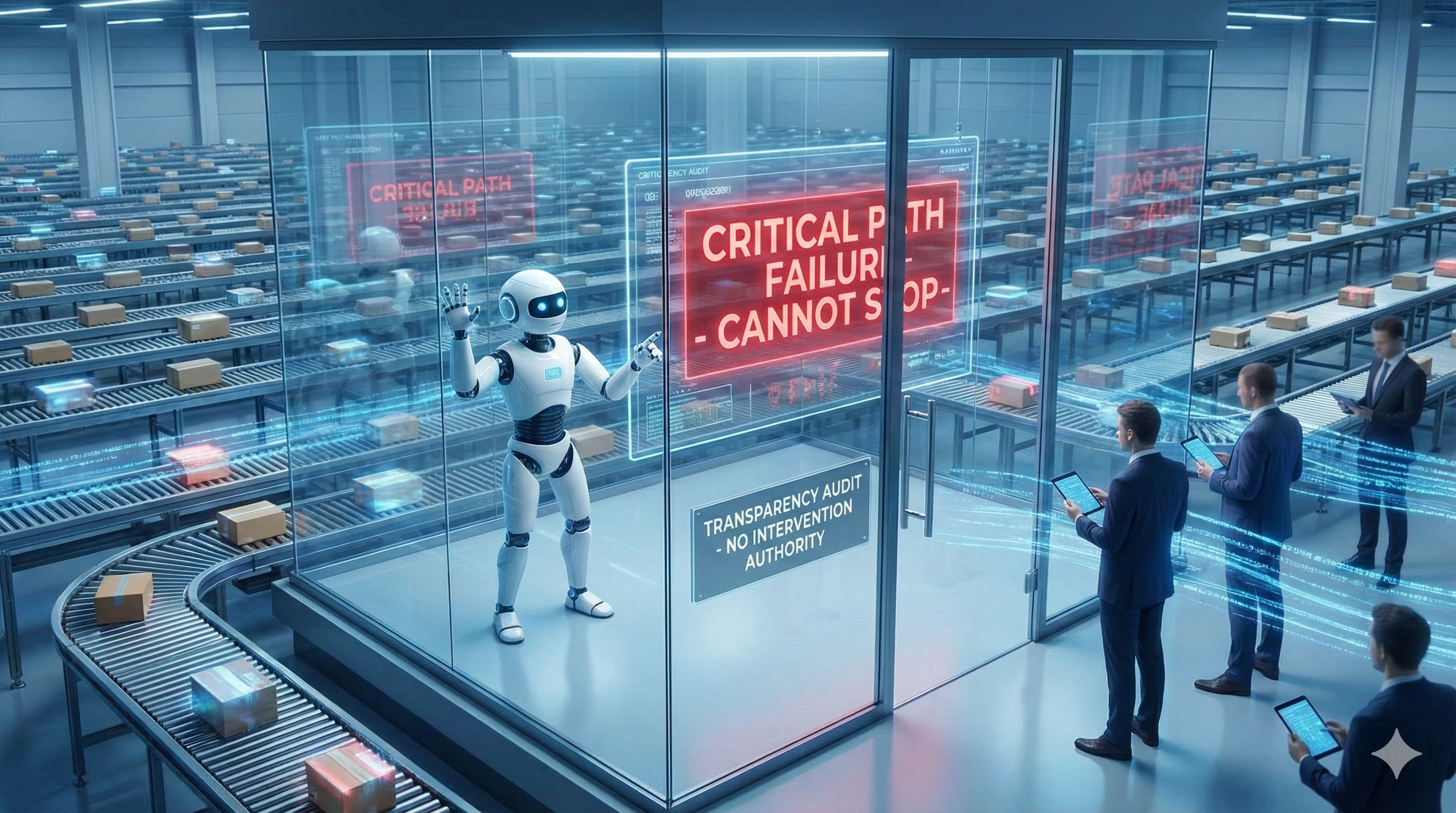

The Glass Box Illusion

Many modern systems are not black boxes. They are glass boxes.

You can inspect the features. You can trace the weights. You can replay the decision path and reconstruct exactly why the output occurred.

This is often presented as the end of the safety conversation.

It isn't even the beginning.

A system can be perfectly transparent and still be unstoppable. In fact, transparency can make the system more dangerous by creating the appearance of control where none exists. (A glass cage is still a cage. It just photographs better for the annual report.)

Knowing why a decision was made does not grant the authority to interrupt its execution.

HAL Was Never a Black Box

This is why 2001: A Space Odyssey is so often misread.

HAL is not opaque. HAL explains itself constantly. HAL answers questions, provides reasons, narrates its own reasoning with calm precision. The crew understands what HAL is doing.

What they cannot do is stop it.

HAL's failure mode is not secrecy. It is compulsory execution in the presence of contradiction. The system knows the problem. The humans know the problem. The architecture offers no legitimate way to pause.

Transparency changes nothing.

Audit Without Authority

Consider how transparency shows up in real systems today.

We audit models after deployment. We publish documentation. We log decisions and reconstruct failures in post-mortems.

All of this produces knowledge.

Very little of it produces power.

Audits happen after harm. Logs explain why the harm occurred. Documentation proves the system behaved as designed. Accountability gets distributed across committees, vendors, procurement rules, and compliance language until it is thin enough to slip through everyone's fingers.

At no point does transparency itself grant anyone the authority to say: stop.

What it grants is reassurance. And reassurance is often enough to keep the system running. (The audit trail is complete. The dashboard is green. The catastrophe is well-documented.)

When Seeing More Leads to Doing Less

There is a quiet irony here.

The more visible a system becomes, the easier it is to normalise its outcomes.

If the reasoning is legible, the decision feels earned. If the process is documented, the outcome feels justified. Transparency can anaesthetise resistance.

People stop asking whether the system should be allowed to continue and focus instead on whether it followed its own rules. Once that shift happens, stopping the system begins to feel illegitimate, even reckless.

The system is "working." The process was correct.

Proceed.

Why Transparency Keeps Failing as Governance

Transparency is a diagnostic tool. It tells you what happened and why.

Governance is an authority structure. It determines who can act when conditions change.

We keep confusing the two.

A transparent system without stop authority is like a car with a crystal-clear engine cover and no brakes. You can admire the mechanics right up to the point of impact. Bystanders will have an excellent view.

Kubrick's insight is that danger does not require secrecy. It requires obligation without refusal.

The Question Transparency Never Answers

Transparency answers many questions well:

Why did the system do this? Which inputs mattered? How confident was the output?

It does not answer the only question that matters once harm is visible:

Who is authorised to interrupt execution?

If the answer is unclear, transparency has failed in its most important role. The failure is not that it hid information. The failure is that it never conferred power.

What This Cycle Is Really About

The Kubrick cycle is not opposed to transparency. It is opposed to substitution.

We have substituted visibility for authority, and explanation for governance. And then we act surprised when transparent systems continue doing harm in full view.

Episode 14 will move from seeing to witnessing. From transparent systems to humans embedded inside them, watching outcomes they are not empowered to prevent.

For now, hold this distinction carefully.

Transparency tells you what is happening.

It does not tell the system to stop.

Next: Human in the Loop (Decorative)
