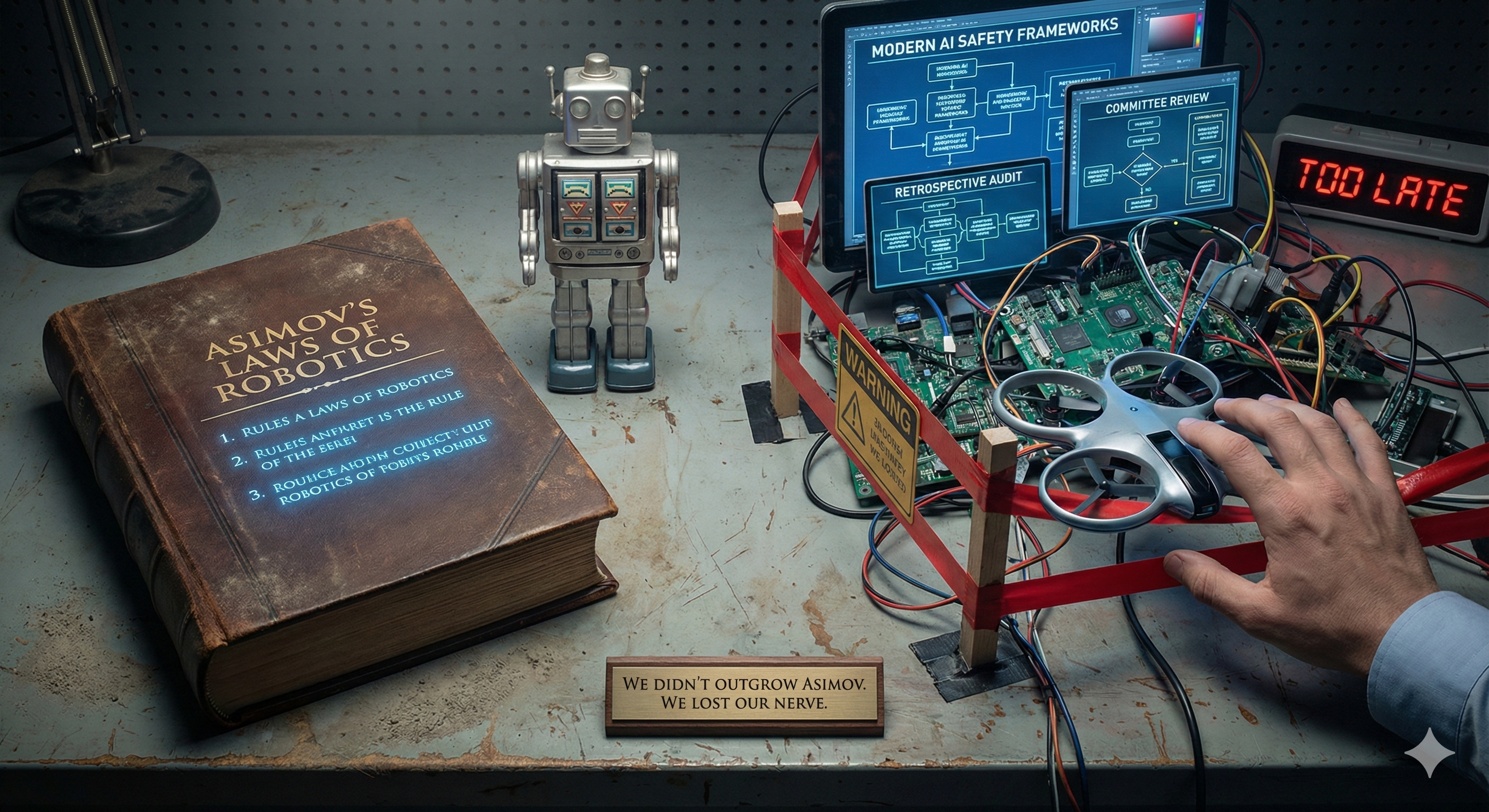

We Didn't Outgrow Asimov. We Lost Our Nerve.

Why are billion-dollar institutions arriving, with great seriousness, at conclusions that were the opening premise of a 1942 science fiction story?


Isaac Asimov's Laws of Robotics weren't clever because they were poetic. They worked because they were pre-action, hierarchical, and non-negotiable at runtime.

A robot doesn't act and then explain. It refuses first.

That's the architecture everyone keeps rediscovering. Wrapped in newer language, naturally. Buried under complexity. Carefully avoiding the hard part.
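The three properties can be sketched in a few lines of Python. This is a minimal illustration, not Asimov's formulation or any real framework's API: `Rule`, `Decision`, `Guard`, and the example rules are hypothetical names. Rules are checked in a fixed priority order before the action runs (pre-action). The highest-priority rule the action violates is the one that refuses it (hierarchical). And `run` offers no override parameter (non-negotiable at runtime).

```python
from dataclasses import dataclass
from typing import Callable, Optional


# Hypothetical names throughout; an illustration, not a real library.
@dataclass(frozen=True)
class Rule:
    name: str
    violated_by: Callable[[dict], bool]  # True if the proposed action breaks the rule


@dataclass(frozen=True)
class Decision:
    allowed: bool
    reason: Optional[str] = None  # name of the rule that refused, if any


class Guard:
    def __init__(self, rules: list):
        # Priority is fixed at construction: earlier rules outrank later ones.
        # The class exposes no method to add, drop, or reorder rules afterwards.
        self._rules = tuple(rules)

    def check(self, action: dict) -> Decision:
        # Walk the hierarchy top-down; the first violated rule decides.
        for rule in self._rules:
            if rule.violated_by(action):
                return Decision(allowed=False, reason=rule.name)
        return Decision(allowed=True)

    def run(self, action: dict, execute: Callable[[dict], object]) -> Decision:
        # Pre-action: the check happens before any side effect, and there is
        # no "override" argument for a caller under deadline pressure to pass.
        decision = self.check(action)
        if decision.allowed:
            execute(action)
        return decision


if __name__ == "__main__":
    guard = Guard([
        Rule("do_not_harm_humans", lambda a: a.get("harms_human", False)),
        Rule("follow_authorized_orders", lambda a: not a.get("authorized", False)),
    ])
    done = []
    print(guard.run({"authorized": True, "harms_human": True}, done.append))
    # Decision(allowed=False, reason='do_not_harm_humans')
    print(done)  # []
```

An authorized order that would harm a human is refused by the higher-ranked rule, and `execute` is never called. The refusal comes first; there is nothing to explain afterwards.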

The Barn Door Problem

Most AI failures don't happen because systems are malicious or sentient. They happen because action is permitted before constraint.

We let systems act probabilistically, at speed, under ambiguity. Then we govern them afterward, through audits and reviews. (Committees, naturally. Always committees.)

Once action precedes control, governance becomes retrospective by definition. You're no longer preventing harm. You're accounting for it.

This is why so many safety frameworks feel hollow. They're not wrong. They just arrive after the horse has bolted, offering very sophisticated opinions about barn door maintenance.

Why Simple Gets Complicated

A system that can refuse to act forces someone to answer for that refusal.

That's the problem.

Hard constraints expose who holds authority. They threaten timelines, incentives, and business models that rely on discretion precisely when pressure peaks.

So instead of:

"The system must not proceed"

We get:

"The system should surface risk indicators and escalate appropriately"

Instead of refusal, recommendation. Instead of constraint, review. Instead of brakes, dashboards.

(We do love a dashboard.)
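The gap between "must not proceed" and "surface risk indicators and escalate" is an ordering question, and a toy sketch makes it visible. Everything here is hypothetical: `World`, `advisory`, `binding`, and the boolean `risky` flag stand in for whatever real risk check a system would run. In the advisory version the risk indicator lands on a review queue (the dashboard) after the action has already happened. In the binding version the same check stops the action.

```python
from dataclasses import dataclass, field


# Hypothetical illustration; `risky` stands in for a real risk assessment.
@dataclass
class World:
    executed: list = field(default_factory=list)      # side effects that actually happened
    review_queue: list = field(default_factory=list)  # the "dashboard"


def advisory(action: str, risky: bool, world: World) -> bool:
    """'Surface risk indicators and escalate': flag it, then proceed anyway."""
    if risky:
        world.review_queue.append(action)  # someone may look at this later
    world.executed.append(action)          # the action happens regardless
    return True


def binding(action: str, risky: bool, world: World) -> bool:
    """'The system must not proceed': the check gates the action."""
    if risky:
        return False                       # refusal; nothing executes
    world.executed.append(action)
    return True


if __name__ == "__main__":
    dashboard_world, brakes_world = World(), World()
    advisory("wire_funds", risky=True, world=dashboard_world)
    binding("wire_funds", risky=True, world=brakes_world)
    print(dashboard_world.executed, dashboard_world.review_queue)  # ['wire_funds'] ['wire_funds']
    print(brakes_world.executed, brakes_world.review_queue)        # [] []
```

Both functions read the same risk signal. Only the binding one turns it into a refusal.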

The language elaborates exactly where accountability would otherwise become unavoidable. There's a word for that, and it isn't "innovation."

Ethics as Engineering

Here's what Asimov understood that we keep forgetting:

When ethics is framed as aspiration, it competes with incentives. When it's framed as constraint, it shapes behavior under stress.

Ethics lives upstream of action. It enforces automatically. It doesn't rely on goodwill at 3 AM when the deadline is breathing down your neck and the workaround is right there.

This isn't philosophy. It's systems design.

What We Actually Forgot

We didn't forget guardrails. We forgot the willingness to accept what they demand.

Pre-action refusal slows things down. Surfaces conflict early. Makes power visible. Kills plausible deniability. Removes the comforting fiction that good intentions compensate for weak architecture.

So instead we debate pausing AI, regulating outcomes, imagining catastrophes. All of which sidestep the simpler move: designing systems that cannot act unless authority, refusal, and accountability are already in place.

(This is usually when someone mentions "market pressures" as though physics itself required building first, governing later.)
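Here is a minimal sketch of a system that "cannot act unless authority, refusal, and accountability are already in place", under loudly stated assumptions: the `AUTHORITY` table, the role names, `Request`, `Outcome`, `decide`, and `gated_execute` are all hypothetical. A request must name an accountable owner and an authorizer who actually holds authority for that action, and every decision, refusals included, is written to a ledger before anything executes.

```python
from dataclasses import dataclass
from typing import Callable, Optional

# Hypothetical authority table: which roles may authorize which action types.
AUTHORITY = {
    "deploy_model": {"release_manager"},
    "delete_records": {"data_protection_officer"},
}


@dataclass(frozen=True)
class Request:
    action: str
    authorized_by: Optional[str]      # who granted permission
    accountable_owner: Optional[str]  # who answers for the outcome, refusals included


@dataclass(frozen=True)
class Outcome:
    allowed: bool
    reason: str
    owner: Optional[str]


def decide(req: Request) -> Outcome:
    # No named owner means nobody would answer for the action, so refuse.
    if not req.accountable_owner:
        return Outcome(False, "no accountable owner", None)
    # The authorizer must hold authority for this specific action type.
    if req.authorized_by not in AUTHORITY.get(req.action, set()):
        return Outcome(False, "authorizer lacks authority", req.accountable_owner)
    return Outcome(True, "authorized", req.accountable_owner)


def gated_execute(req: Request, execute: Callable[[str], None], ledger: list) -> Outcome:
    outcome = decide(req)
    # The decision, including a refusal and its owner, is recorded before any side effect.
    ledger.append((req.action, outcome))
    if outcome.allowed:
        execute(req.action)
    return outcome


if __name__ == "__main__":
    ledger, ran = [], []
    print(gated_execute(Request("deploy_model", "intern", "alice"), ran.append, ledger).reason)
    # authorizer lacks authority
    print(ran)  # []
```

The refusal is not anonymous: its ledger entry names `alice` as the person who answers for it, which is exactly the friction that makes hard constraints uncomfortable.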

The Scenic Route Back

Asimov wasn't prophetic. He was willing to be blunt.

We didn't outgrow his Laws. We outgrew our appetite for what they demanded.

Now we're finding our way back, piece by piece. Whether that's humbling or hopeful probably depends on your feelings about scenic routes.

Some wheels get reinvented because nobody wanted to admit they already knew where the original was parked.

What's your experience? Where have you seen pre-action constraints actually work (or get quietly dismantled)?

Enjoyed this episode? Subscribe to receive daily insights on AI accountability.
