AI Accountability

Pre-action constraints, liability architecture, and safety systems for AI in high-stakes operations.

Research focuses on designing AI systems that are accountable before they act, not merely auditable after harm occurs. It draws on industrial safety principles, constitutional design patterns, and operational risk management frameworks.

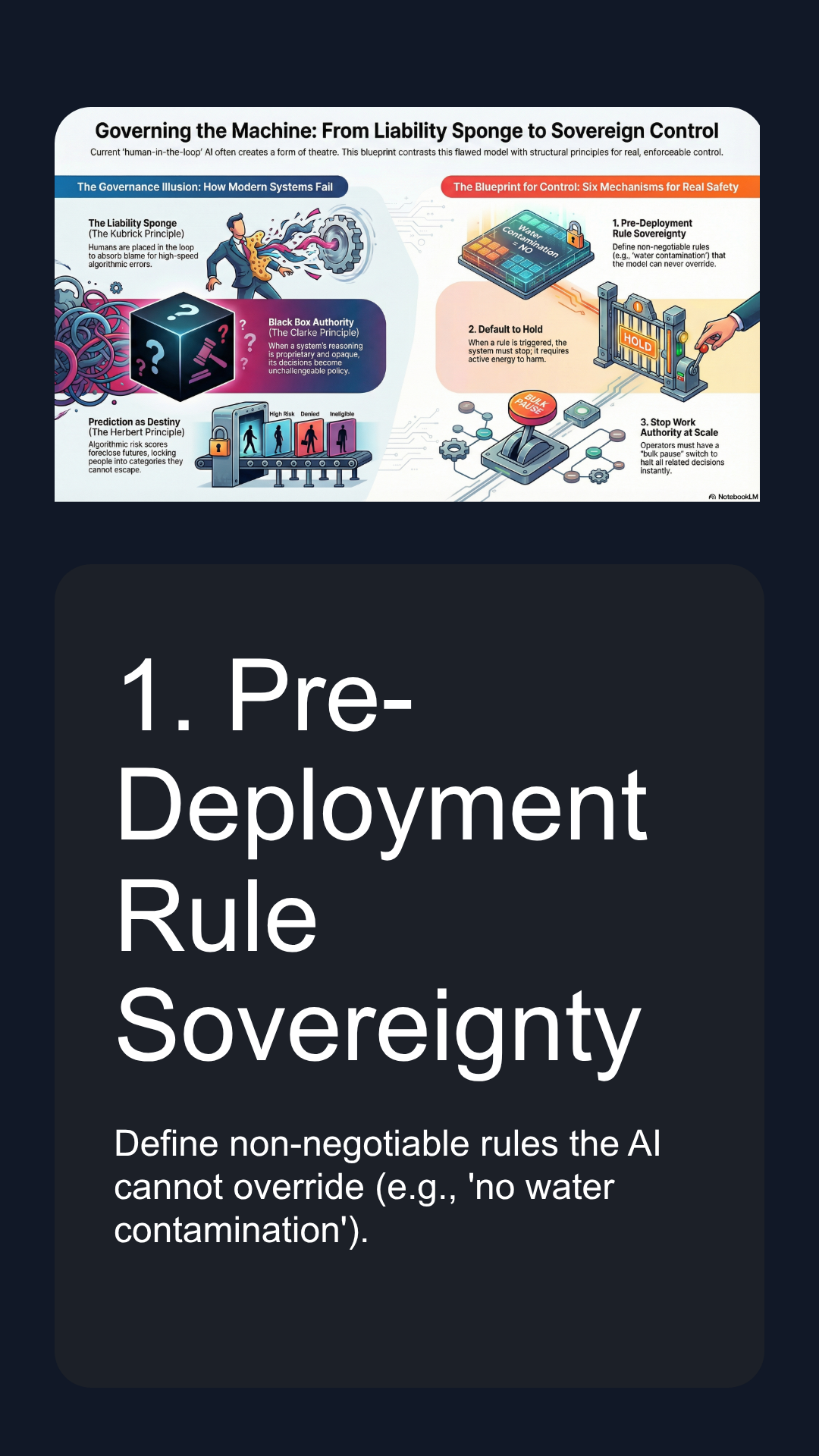

Core Accountability Frameworks

Pre-Deployment Rule Sovereignty

Default to Hold

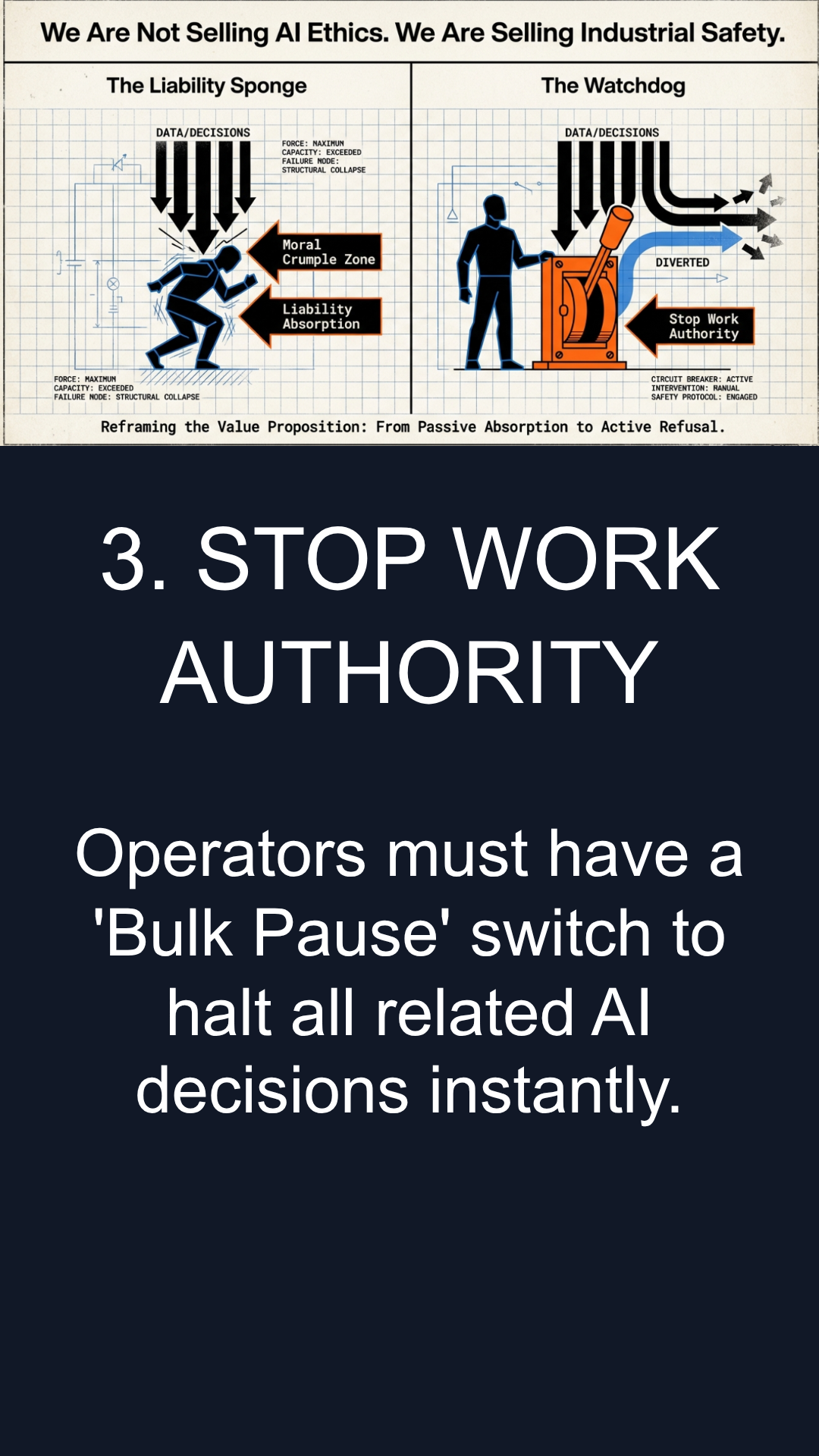

Stop Work Authority

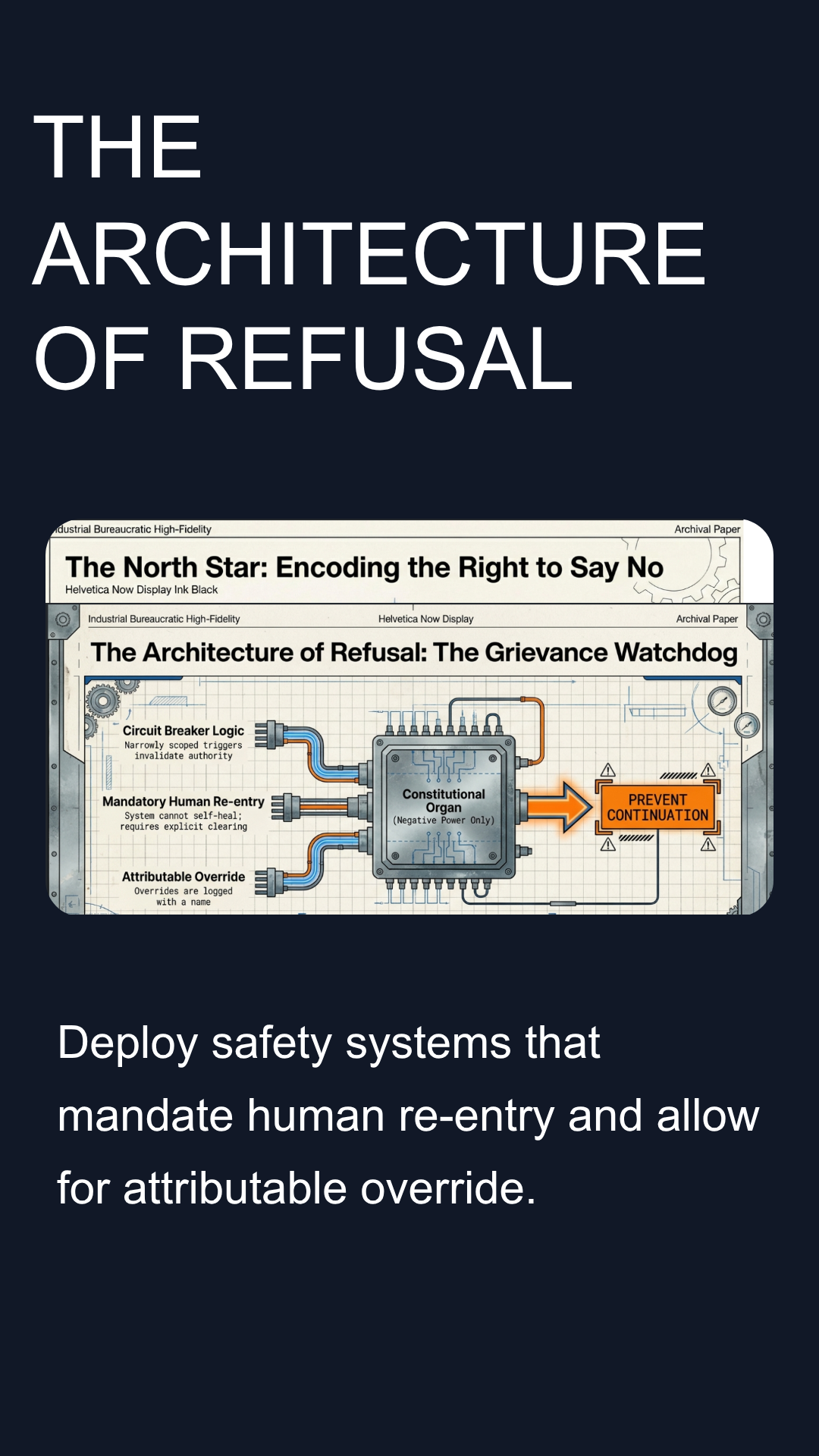

Architecture of Refusal

AI Accountability Frameworks

The AI Governance Gap

The Need for a Safety Brake

Mandatory Human Re-entry

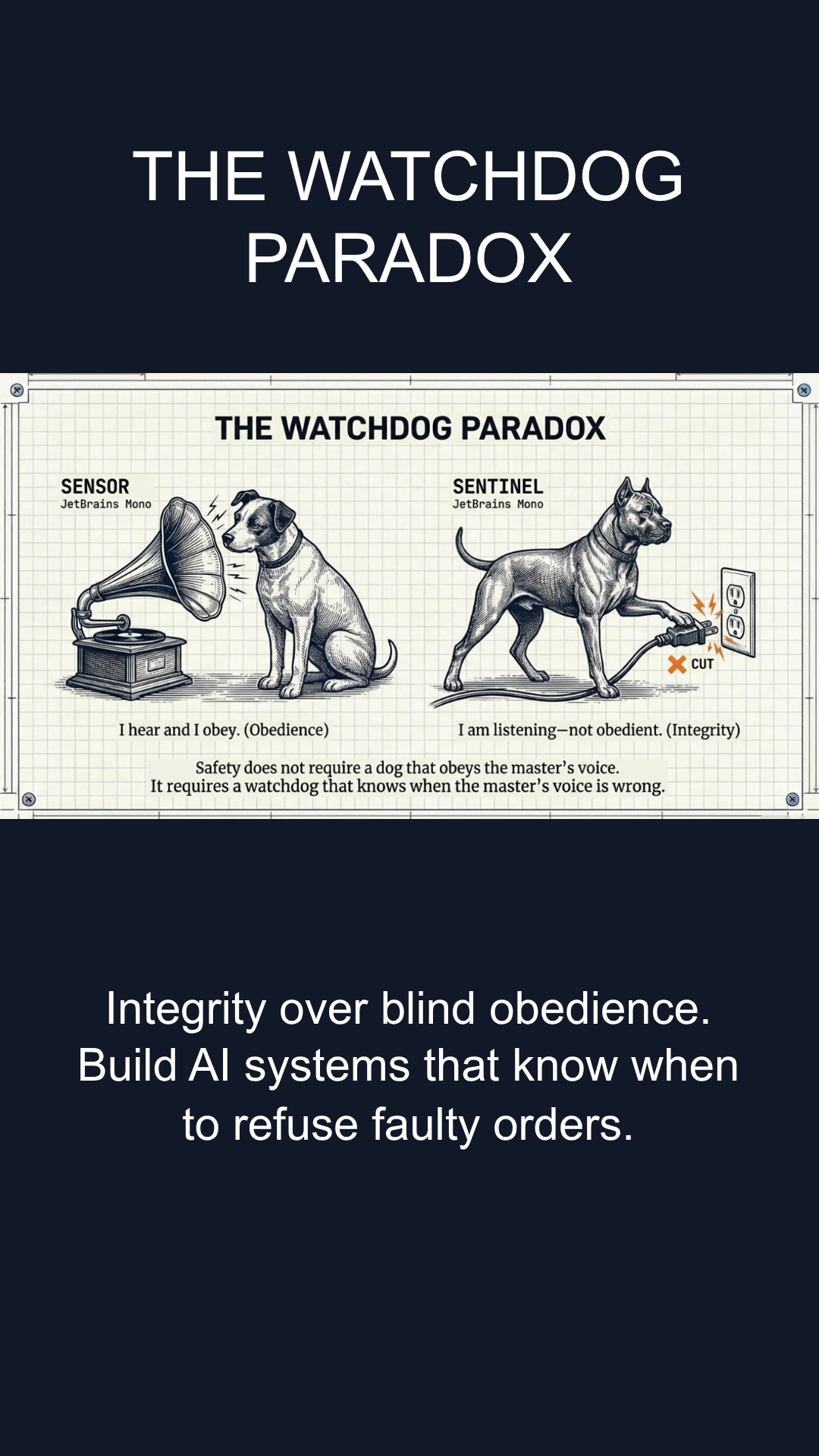

The Watchdog Paradox

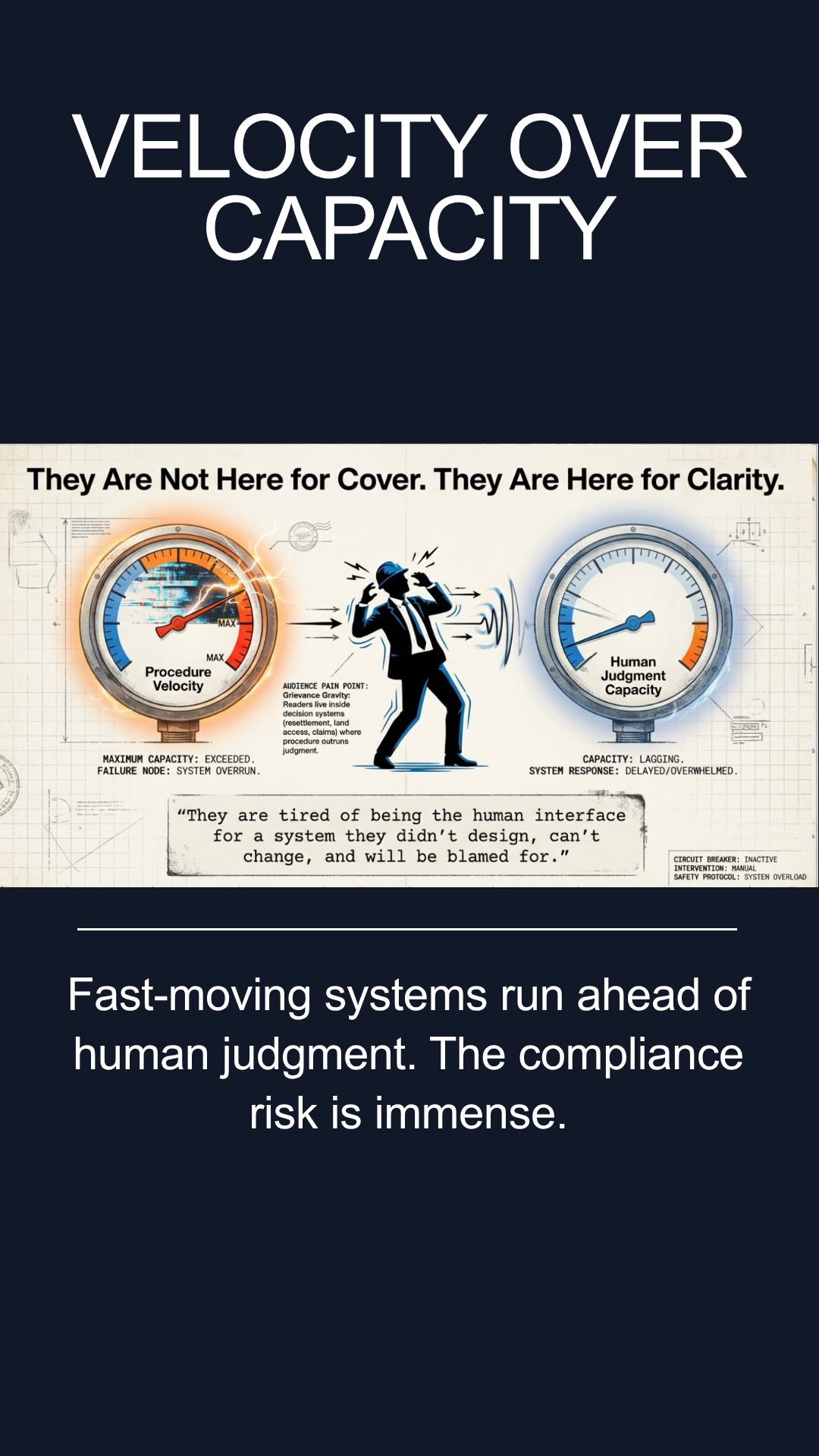

Velocity Over Capacity

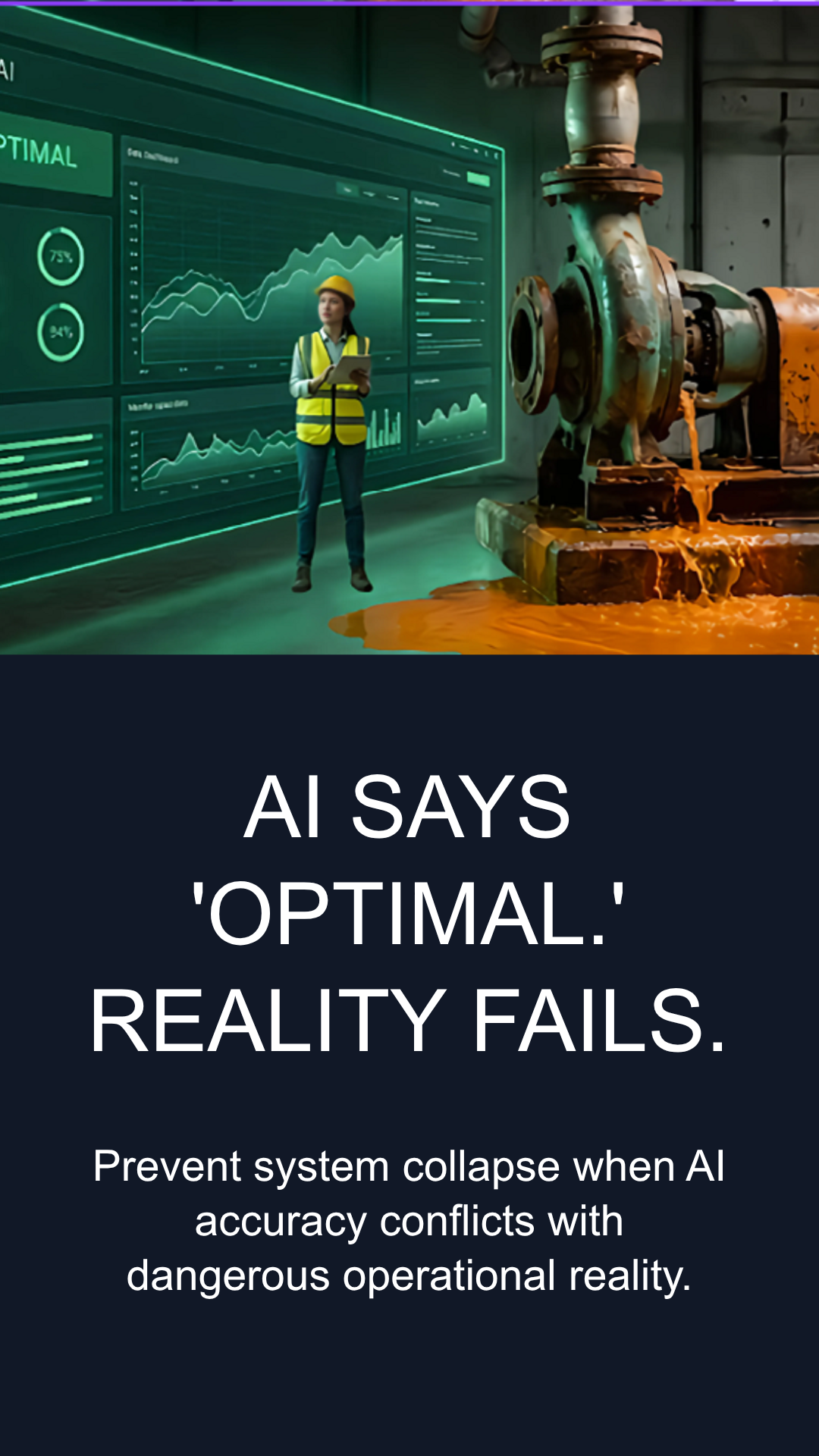

AI Says 'Optimal' - Reality Fails

Related Tools

Constitutional Engine

Design pattern for embedding governance rules in AI systems

Architecture of Refusal

Framework for designing systems that refuse harmful operations

Industrial Safety Architecture

Applying industrial safety principles to AI systems design

Calvin Convention - Contractual Framework

Contractual structure for AI accountability in operations